Workshop on ethics for research and teaching in natural language processing

Organised by the Stockholm, Uppsala and Umeå interest group in ethics for NLP

- Date: 23rd January 2024

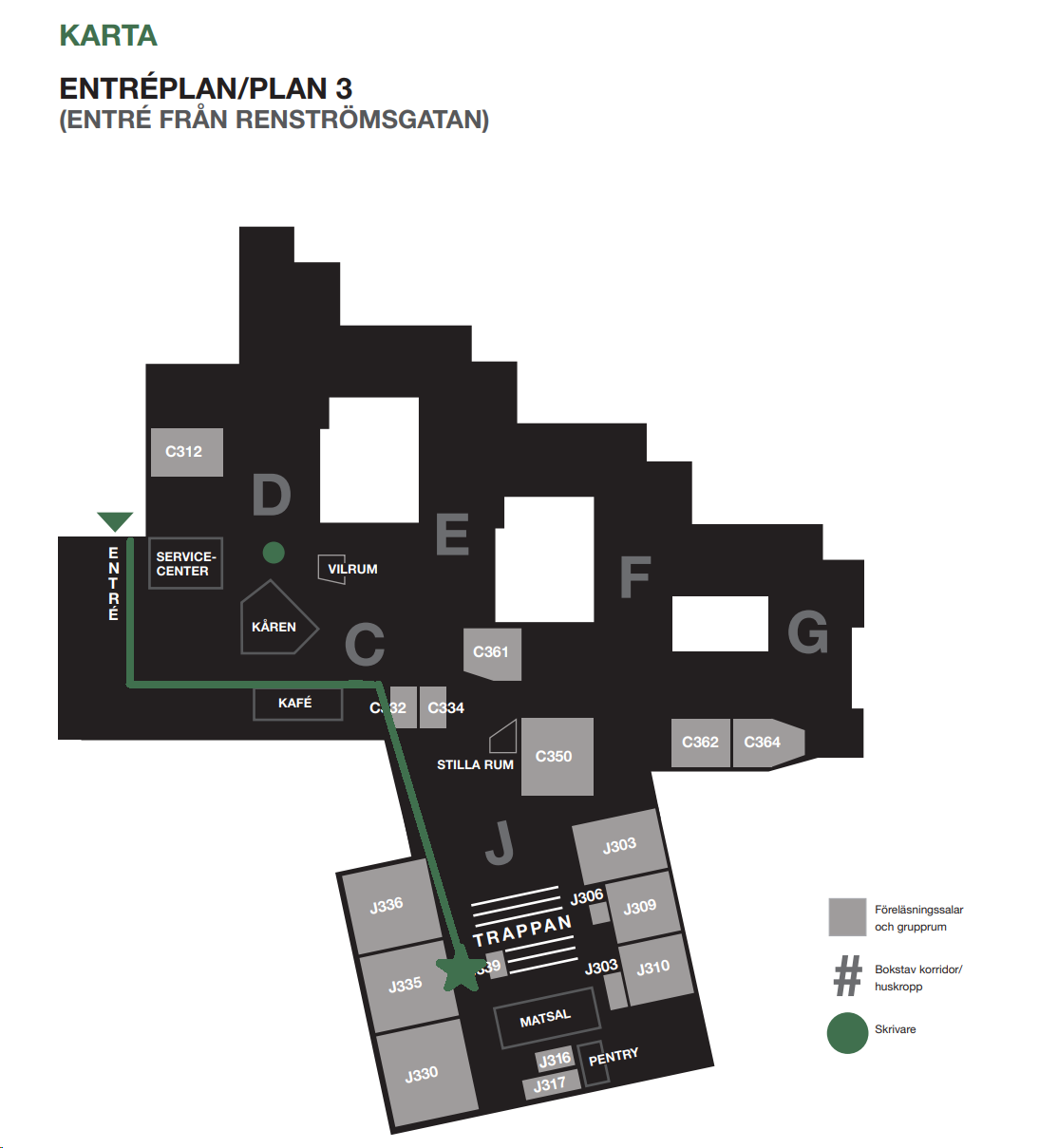

- Location: Humanisten, Department of Philosophy, Linguistics and Theory of Science (FLoV), University of Gothenburg, room J335

- Address: Renströmsgatan 6, floor 3

- Room: J335

Table of Contents

- Programme

- How to find us

- Workshop description

- Invited speakers

- Lightning talks

- Submissions - Note: the deadline for submissions has already passed!

- Important dates

- Registration

- Instructions for presenters

- Workshop Organisers

- Local Organisers

- Acknowledgements

Programme

- 10:00 Welcome

- 10:15 Keynote: Kristina Knaving

- 11:00 Break

- 11:15 Keynote: Stefan Larsson

- 12:00 Lunch

- 13:15 Keynote: Juan Carlos Nieves Sanchez

- 14:00 Lightning talks

- 14:00 - 14:10: Iris Guske: Patterns vs particularities: Merging NLP and case study capabilities and responsibilities in life history research

- 14:10 - 14:20: Nikolai Ilinykh: Biases in language-and-vision NLP: where we are and what we might need

- 14:20 - 14:30: Matilda Arvidsson: Legal discretion and the many meanings of law’s language: Challenges and possibilities for ‘NLP-as-law’s-helping-hand’

- 14:30 - 14:40: Thomas Vakili: Privacy in the era of large language models

- 14:40 - 14:50: Tom Södahl Bladsjö: All humans are human, but some ethnicities are more ethnic than others: On model reporting bias with regards to marginalized groups

- 14:50 - 15:00: Q&A session for the lightning talks speakers

- 15:00 Coffee break

- 15:30 Group discussions

- 16:30 Summaries of discussions and final thoughts

- 17:30 Close

How to find us

Workshop description

AI tasks that relate to modelling human language and that focus on decision making (such as identifying patient diagnoses and driving) have developed substantially over the last several years. In many areas it has been claimed that AI learning from data alone has achieved human-like performance, sometimes even better, but due to the nature of these models it is very hard to inspect directly what they have learned. Instead, we can only observe their performance, which might be biased in one or more ways, and which has societal and environmental implications.

Language technology (also known as computational linguistics or natural language processing) is an interdisciplinary field between linguistics, psychology, cognitive science and computer science that deals with building computational models which can behave as if they understand natural language. Due to the aforementioned developments, developers, researchers and teachers of language technology have identified a need to address ethical issues related to data collection, training and usage of such models in various real-life applications. We should equip ourselves as researchers, teachers and students with an understanding of the impacts of using such technology, and promote ethically-aware research and utilisation.

Intended participants are researchers, university teachers, master's and PhD students from diverse backgrounds who deal with ethical questions related to the development and applications of language technology (language technology and related fields such as computer vision and social robotics, machine learning, philosophy, medicine, law, media, politics, etc.). We foresee an interactive workshop with plenty of time for discussion, complemented with invited talks and presentations of on-going or completed research. Participants are also encouraged to submit extended abstracts (and other materials) that will be shared with others during the workshop and/or presented as posters.

The workshop represents the first forum following the discussions at several Swedish language technology ämnesdagar (subject days). Topics that have been addressed there include biases in training data, biases in computational models, personal integrity (anonymisation and pseudonymisation of data), intellectual property rights, using language technology tools to detect non-ethical language use, utilising language technology in under-resourced domains and communities (e.g. minority languages), legal aspects of using language technology, and philosophical questions. The purpose of the workshop is to discuss these points further from the research perspective and later develop them into a short (e.g. 2.5 hec) online course for students of language technology and related fields at the participating sites, as well as for members of the general public interested in this area.

Invited speakers

- Kristina Knaving, RISE

- Generative AI, like ChatGPT, DALL-E, and Midjourney, has recently changed our view of what AI can do by entering a traditionally human domain - creativity. What can we truly expect from AI, and what do we want to expect? Kristina will give an outlook on the present and future of AI and generative AI in creative work and society as a whole. There are many opportunities, but also concerns and questions about ethics, democracy, and privacy.

- Kristina Knaving is a senior researcher at RISE, and is responsible for the focus area “The Connected Individual”. She has a background in human-computer interaction, visualization, and decision support. Her research focuses on the opportunities, risks, and ethical issues surrounding personal data and AI, and how new technologies affect individuals and society.

- Stefan Larsson, Lund University

- The Perils of Being Normative: Towards a Socio-Legal Framework on Social Norms and Adaptive Technologies

- While recent progress has been made in several fields of data-intense AI research, many applications have been shown to be prone to unintentionally reproducing social biases, sexism and stereotyping. As more design-based, algorithmic or machine learning methodologies, here called adaptive technologies, become embedded in anything from commonly used software to robotics, there is a need for a developed understanding of what role social norms play in the interplay between human expressions and technology, particularly with regard to fairness. In this presentation, Larsson proposes a theoretical framework for the interplay between adaptive technologies and social norms in order to point to the often normative, non-neutral aspects of developing and implementing adaptive technologies.

- Stefan Larsson is a senior lecturer and Associate Professor in Technology and Social Change at Lund University, Sweden, Department of Technology and Society at LTH. He is a lawyer and socio-legal researcher who holds a PhD in Sociology of Law as well as a PhD in Spatial Planning. He leads a multidisciplinary research group on AI and Society, which studies the impact of AI-supported technologies in various domains, such as on consumer markets, in the public sector, for health, and social robotics.

- Juan Carlos Nieves, Umeå University

- Framework for Trustworthy AI Education

- During this presentation, we will present the Framework for Trustworthy AI Education that was developed during the Erasmus Plus project - Trustworthy AI. The main goal of this Framework is to describe the principles and learning strategies to be followed to develop students’ competencies in Trustworthy AI. Some questions that were approached with the Framework for Trustworthy AI Education are: What strategies are needed for effectively introducing the High-Level Expert Group’s requirements in Higher Education? Which competencies and learning outcomes related to Trustworthy AI should Higher Education students develop? How should they be assessed?

- Juan Carlos Nieves is an associate professor at the Department of Computing Science, Umeå University (UMU) (Sweden). He is the programme Director of the MSc programme in Artificial Intelligence at UMU. He is the research leader of the Formal Methods for Trustworthy Hybrid Intelligence group, and an affiliated member of the Responsible Artificial Intelligence group at UMU. He has been serving as an external (Ethical) advisor/reviewer in different EU projects. He has also served as an expert reviewer for different European national research councils. He has been an AI-ethical advisor for European initiatives such as EU BonAPPS and for American initiatives such as fAIr LAC of the Inter-American Development Bank.

Lightning talks

- Iris Guske

- The Case for Merging NLP and Case Study Capabilities in Life History Research

- Adept at detecting patterns, NLP algorithms may fall short in capturing singular, context-specific responses critical in life history research. As a variable of, e.g., self-interest, interviewees might withhold/alter information, which would compromise the NLP dataset. In a case-study setting, meaning can be made of gaps or contradictions in self-reconstructions by transcending discourse units. Likewise, an amalgamation of NLP outputs with data from field notes, letters, diaries, photos, or official documents would provide contextual depth and bridge the gap between overarching patterns and individual narratives.

Case-study methods already necessitate consideration of ethical dilemmas, including informed consent, confidentiality, and trust. Contextual/situational nuances, such as power dynamics or cultural backdrop, affect data quality and have ethical implications. These go beyond NLP’s current focus on mitigating algorithmic biases to ensure fair representation and prevent perpetuation of stereotypes. They also take into account data ownership and transparency beyond the initial text corpus, a prerequisite for secondary research. As that is bound to increase with the rise of NLP, case-study best practices could serve as a model. However, consideration of confidentiality and third-party rights might affect readability and credibility, and even violate the integrity of the text, so the convenience of using NLP on the same dataset in countless secondary research projects should not compromise academic rigour.

- Dr. Iris Guske is the Academic Director of the Kempten School of Translation & Interpreting Studies, and she has been involved in aligning Bavarian undergrad T&I Curricula with AI-driven NMT advances. She has a background in Communication Studies and Developmental Psychology and has published books and articles on German-Jewish child refugees, displacement, global educational issues and, more recently, the use of AI in audiovisual translation.

- Nikolai Ilinykh

- Biases in language-and-vision NLP: where we are and what we might need

- In this talk I will provide examples of different types of biases present in the output of language-and-vision models along with descriptions of some of the existing solutions to mitigate such biases. I will also give an overview of a few possible solutions to bias mitigation in multi-modal models. I will take a step back to revisit the definition of “bias” as adopted in the language-and-vision community. I argue that the primary motivation for developing a more robust multi-modal agent should come from observing how humans deal with biases – by understanding context, developing reasoning and abstractions. Finally, I emphasise the importance of continually re-evaluating biases within the models. This is crucial as the performance of language-and-vision models could potentially have significant negative societal impacts.

- Nikolai Ilinykh is a doctoral student at the Department of Philosophy, Linguistics and Theory of Science (CLASP group) at the University of Gothenburg. His current research primarily revolves around image paragraph generation and building language-and-vision models that are good generalisers with inspiration from human behaviour and human patterns.

- Matilda Arvidsson

- Legal discretion and the many meanings of law’s language: Challenges and possibilities for ‘NLP-as-law’s-helping-hand’

- In my research I have explored questions on legal discretion and decision making (Arvidsson & Noll 2023), the meaning and matter in law’s language (e.g., Arvidsson 2024, Arvidsson 2023), as well as the “human in the loop” (Arvidsson 2020, 2018) in relation to AI. In my brief talk I will relay some of the findings from my previous research and point to challenges and possibilities for using NLP in legal research and practice.

- Matilda Arvidsson is an associate professor of international law and assistant senior lecturer in jurisprudence at the Department of Law, the University of Gothenburg. Her research interests are interdisciplinary and include AI and law, posthumanism and technology, feminism and ethnography, as well as the embodiment of law in its various forms and in inter-species relations.

- Thomas Vakili

- Privacy in the era of large language models

- The NLP community is seeing widespread use of large language models (LLMs) that consist of vast numbers of parameters trained using enormous amounts of data. This combination of parameter count and training data size poses a risk to the privacy of individuals mentioned in the training data of LLMs. In this talk, I will give an overview of these privacy risks and some proposed mitigation strategies. A particular emphasis will be placed on how automatically pseudonymizing training data can reduce privacy risks while preserving their usefulness.

- Thomas Vakili is a PhD student at the Department of Computer and Systems Sciences at Stockholm University. The primary focus of his research is understanding the privacy risks of modern natural language processing practices, and exploring how to make these practices safer.

- Tom Södahl Bladsjö

- All humans are human, but some ethnicities are more ethnic than others: On model reporting bias with regards to marginalized groups

- Human reporting bias is the tendency to omit unnecessary or obvious information while mentioning things that are considered relevant or surprising. This phenomenon affects what a model will learn when trained on human language. In this talk I will discuss how reporting bias relates to and interacts with social biases, and how this phenomenon can help us understand what perspectives, and what norms, are being encoded in models – in other words, whose language we are modeling when we model language. I will present some preliminary findings on reporting bias with regards to marginalized ethnicities in image captioning models, which support the hypothesis that models encode a perspective where whiteness is considered the default for humans.

- Tom Södahl Bladsjö is a master’s student of language technology at the University of Gothenburg. His research interests include bias and fairness in AI, subjectivity in ground truthing, and the relationship between model and training data.

Submissions

Submissions of up to 4 pages should follow the ACL formatting template, should be in English and contain full contact information of the presenter(s). Authors are also encouraged to submit any other information that they would like to discuss and share about this topic, in particular if it is relevant for the preparation of the course.

Please upload your submissions (zipped in a file name.surname.zip) with your full contact details here.

Important dates

- Submission of extended abstracts: 1 December 2023

- Notification of acceptance: 22 December

- Registration: 8 January 2024. It is still possible to sign up to be added to the waiting list!

All deadlines are 11:59PM UTC-12:00 (“anywhere on Earth”).

Registration

Please register for the workshop here by 8 January 2024. Note that even though the deadline has passed, it is still possible to register to be added to the waiting list.

Because of its interactive nature, the workshop will be on-site only. However, future events with online participation are also planned. To be informed about these, please subscribe to our mailing list.

You are also encouraged to join our Discord, which we intend to become a meeting point for sharing experiences and materials related to teaching and research in ethics for natural language processing before, during and after the workshop.

Instructions for presenters

- Keynote talks will be 45 minutes.

- 10 minutes of these are intended for questions.

- Lightning talks will be 10 minutes each.

- After each presentation we will take questions during the change of speakers.

- After the lightning talks there will be 10 minutes for questions for all speakers.

- We hope that additional discussions will take place during the group discussion in the second half of the workshop.

To enable quicker speaker switching, please upload your presentation slides as a PDF to this shared folder before the talk.

- The folder as well as the slides will be accessible from the presentation computer in the room.

- If you would like to update your slides, simply upload a new version with the same filename.

- Please name your slides as lastname_title.pdf to make it easier for us to know which presentation belongs to whom.

If you have any additional presentation requirements, e.g. slides that are not in PDF format, playing video and sound, etc., please contact Ricardo in advance.

Workshop organisers

- Hannah Devinney, Umeå University

- Simon Dobnik, University of Gothenburg (contact person)

- Beáta Megyesi, Stockholm University

Local organisers

- Ricardo Muñoz Sánchez, University of Gothenburg

- Maria Irena Szawerna, University of Gothenburg

Acknowledgements

We are grateful for the financial support from:

- the council of vice-chancellors of Gothenburg, Lund, Stockholm, Umeå and Uppsala universities (SLUGU)

- Department of Philosophy, Linguistics and Theory of Science (FLoV), University of Gothenburg

Image by Freepik